AI community management used to sound like a futuristic perk. Now it’s quietly running your community: at scale, behind the scenes, and often without real oversight.

For associations, chambers of commerce, and other member-based organizations, it’s been pitched as the fix-all: faster moderation, smarter feedback, better engagement. But something feels off. Conversations start to sound sterile. Feedback loops lose meaning. Human connection starts to slip through the cracks.

AI isn’t the bad guy. But it’s not neutral either. Adopt it blindly, and it becomes a black box, reshaping relationships, filtering voices, and shifting power away from people toward algorithms.

This is a necessary pause. A gut check on what AI community management is actually doing today in spaces built on trust, dialogue, and shared values.

Let’s crack that black box open.

Every AI Community Management Model Is a Mirror. But What Exactly Is It Reflecting?

The promise of AI community management is that it brings structure. Order. Fairness. That by taking the messy unpredictability of human moderation and replacing it with algorithmic logic, we create more consistent, scalable experiences.

That’s the promise.

Every AI model is a mirror. It reflects data and the worldview encoded in that data: flaws, omissions, cultural assumptions, and all. And when your AI model is trained on what the internet already thinks about gender, race, identity, and language, you’re scaling historical bias.

Let’s break that down.

In theory, AI systems moderate communities by applying clear “rules.” But those rules are derived from past patterns: annotated datasets labeled by humans, scraped from public forums, media sites, and chat logs. And those past patterns often carry deeply embedded prejudice.

Researchers have found that large language models consistently penalize African American Vernacular English (AAVE), misinterpret LGBTQ+ topics as unsafe, and downrank posts related to indigenous rights, political dissent, and disability activism. These are baked-in defaults.

So, what happens when you hand over your member engagement platform to a system like that?

What happens is silence: subtle, pervasive silence.

Culturally specific phrases get flagged. Sarcastic jokes get removed. Members who already feel underrepresented see their voices minimized by “automated filters.” But there’s no escalation path, no real explanation. Just a quiet nudge to stop speaking up.

Bias in AI Community Management Is a Moral and Performance Issue.

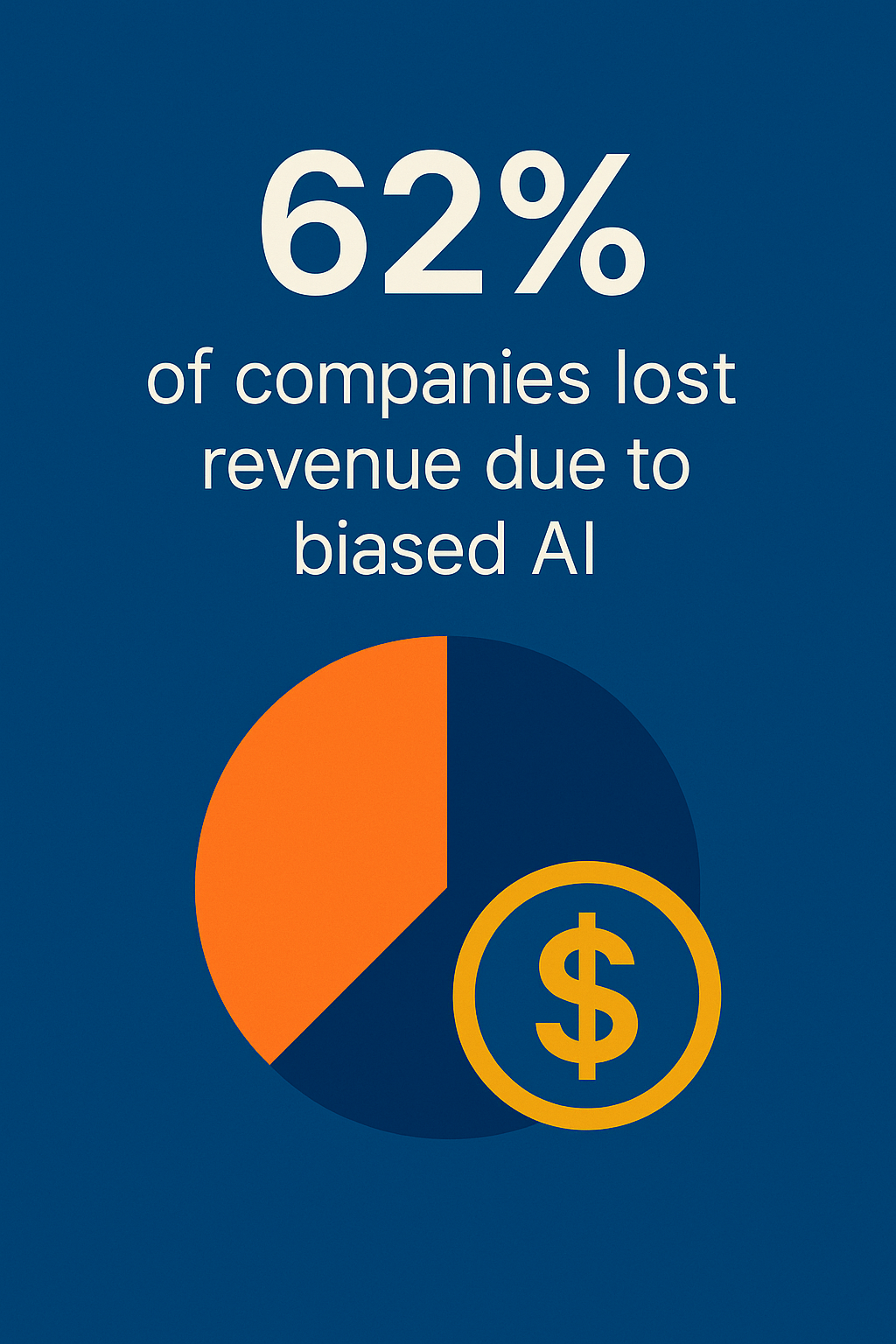

A 2024 survey by InformationWeek revealed hard consequences for organizations using biased AI:

62% of companies reported losing revenue due to unfair or inaccurate AI decision-making

61% lost customers, often those in underrepresented groups

43% saw internal pushback or resignation due to employee mistrust of automated systems

Legal costs followed, with 35% of respondents paying settlements or dealing with lawsuits related to AI bias

And 6% endured brand damage after the media spotlighted biased platform behavior

That’s more than bad PR. It’s structural failure.

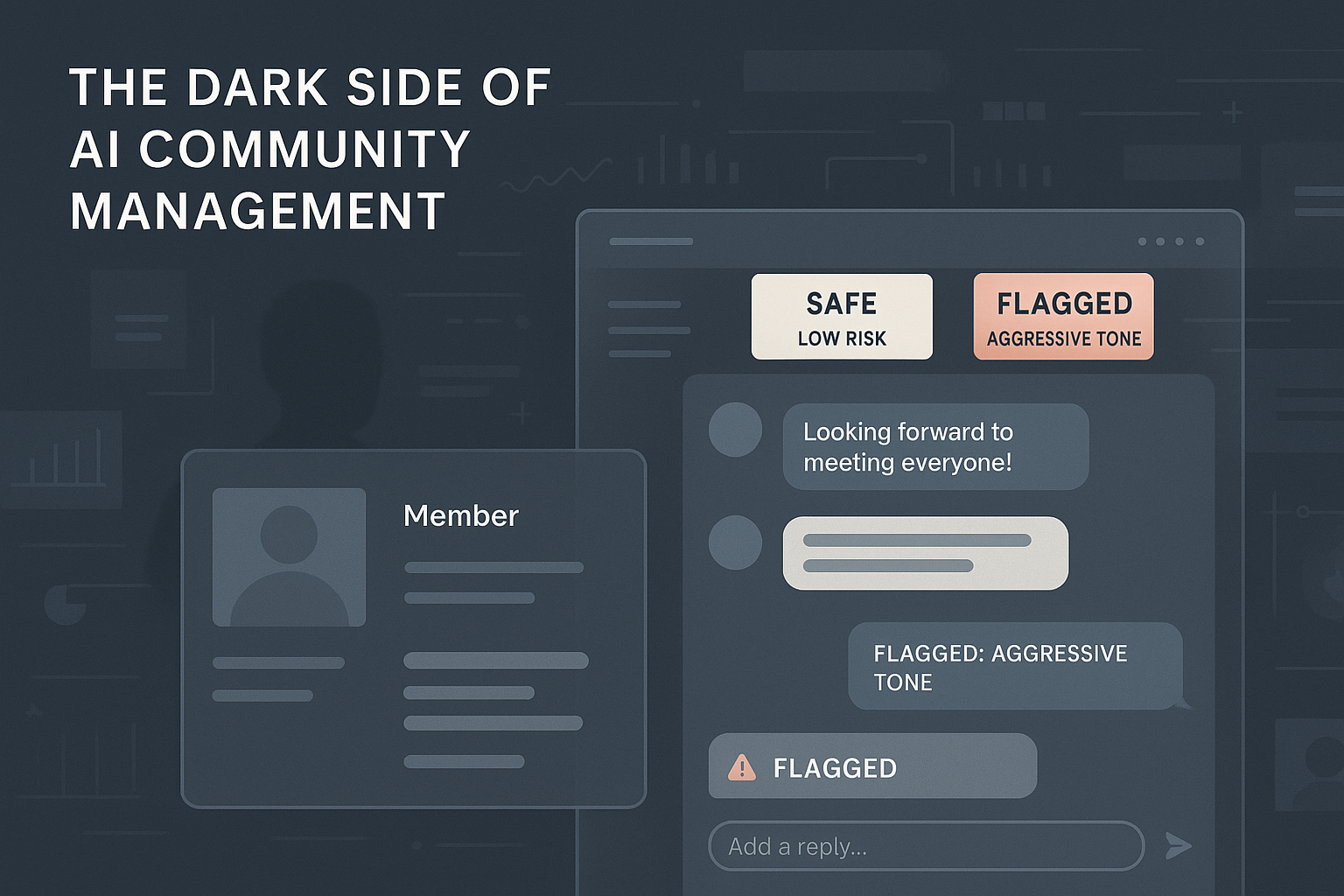

For associations, member networks, and chambers of commerce, this plays out more quietly, but just as destructively. A new member joins and gets flagged for “aggressive tone” because they used language from their native dialect. A post about DEI initiatives disappears because the model flagged certain terms as “divisive.” An honest, vulnerable comment about discrimination gets mislabeled as a complaint.

Eventually, those members stop posting. Or they leave altogether.

In AI Community Management, Small Errors Are Signals.

One of the most dangerous misconceptions about AI is that it “mostly gets it right.” But in communities, accuracy isn’t enough. The margin for error is incredibly thin, because people remember when they’re excluded, especially when they’re already on the margins.

And moderation is more than enforcement. It signals what kind of speech, and what kind of people, are welcome.

That’s why fairness in AI community management is a baseline requirement. If your platform is built to welcome all but silently exclude some, you’re gatekeeping community with invisible rules no one voted on and few can challenge.

Bias, When Scaled, Becomes Infrastructure. And Infrastructure Is Hard to Change.

Once biased models are embedded into your community tools, they do more than cause occasional problems. They define your culture.

Because every platform shapes behavior. What gets promoted. What gets ignored. What gets punished.

And if those choices are guided by AI systems trained on flawed data, you end up building something that feels efficient on the surface but subtly reinforces the very inequities your organization claims to oppose.

At Glue Up, we believe the only way to responsibly scale AI is by embedding bias audits into the development process. That means regularly testing AI outputs for fairness across demographic groups. That means human oversight. That means making it easy for members to appeal a moderation decision and be heard.

More importantly, it means asking deeper questions before you install the next plugin or AI assistant:

Who trained this system?

Whose values does it reflect?

Who gets harmed if something is wrong?

This Isn’t About Rejecting AI. It’s About Reclaiming Accountability.

AI has a place in community management, just not on autopilot. Not when it determines what’s “safe” to say. Not when it shapes who feels seen. And not when it risks silencing the exact people your community needs most.

Bias is more than a side effect of AI. It’s a design decision. And if you’re using AI without interrogating that design, then bias isn’t the system’s fault. It’s yours.

The organizations that will thrive in the next decade aren’t the ones chasing the latest tool. They’re the ones asking better questions about the tools they already use.

Because in member-based organizations, trust isn’t a KPI. It’s everything.

And once you lose it, no AI can bring it back.

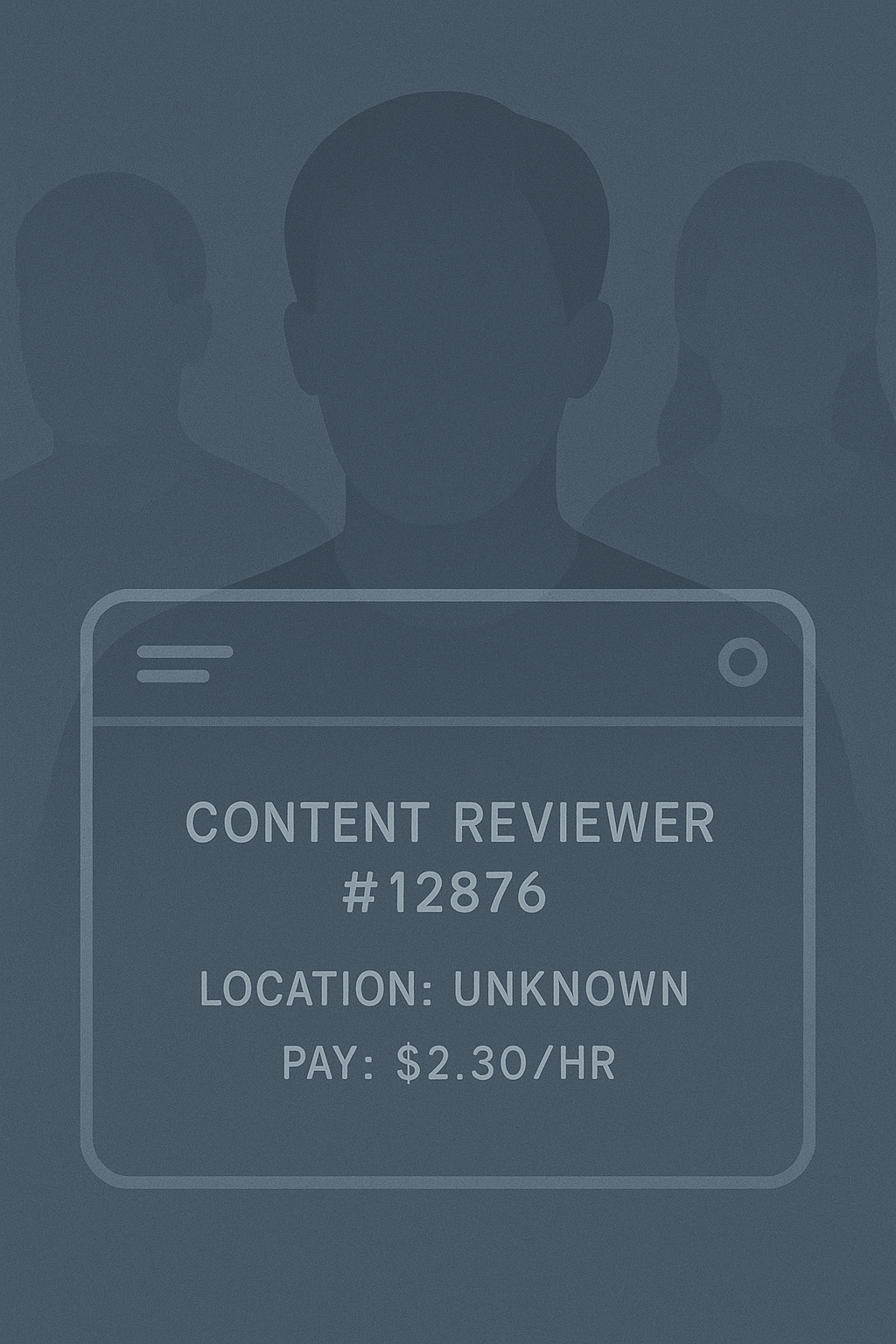

Behind Every ‘Automated’ Moderation Tool Is an Underpaid Human.

The dirty secret of AI moderation? Most of it isn’t AI.

It’s ghost work. Human beings in the Philippines, Kenya, Venezuela, and India manually reviewing disturbing content, labeling edge cases, and feeding the data back into the system. They’re paid $1 to $3 an hour, often working without benefits or mental health support.

At Glue Up, we’ve found that AI is only as responsible as its inputs, and too often, those inputs come at someone else's human cost. These ghost workers are invisible to end users, but their labor fuels every “automated” community experience you see.

If your association values DEI, labor rights, or ethics, you can’t separate your AI tools from the humans behind them.

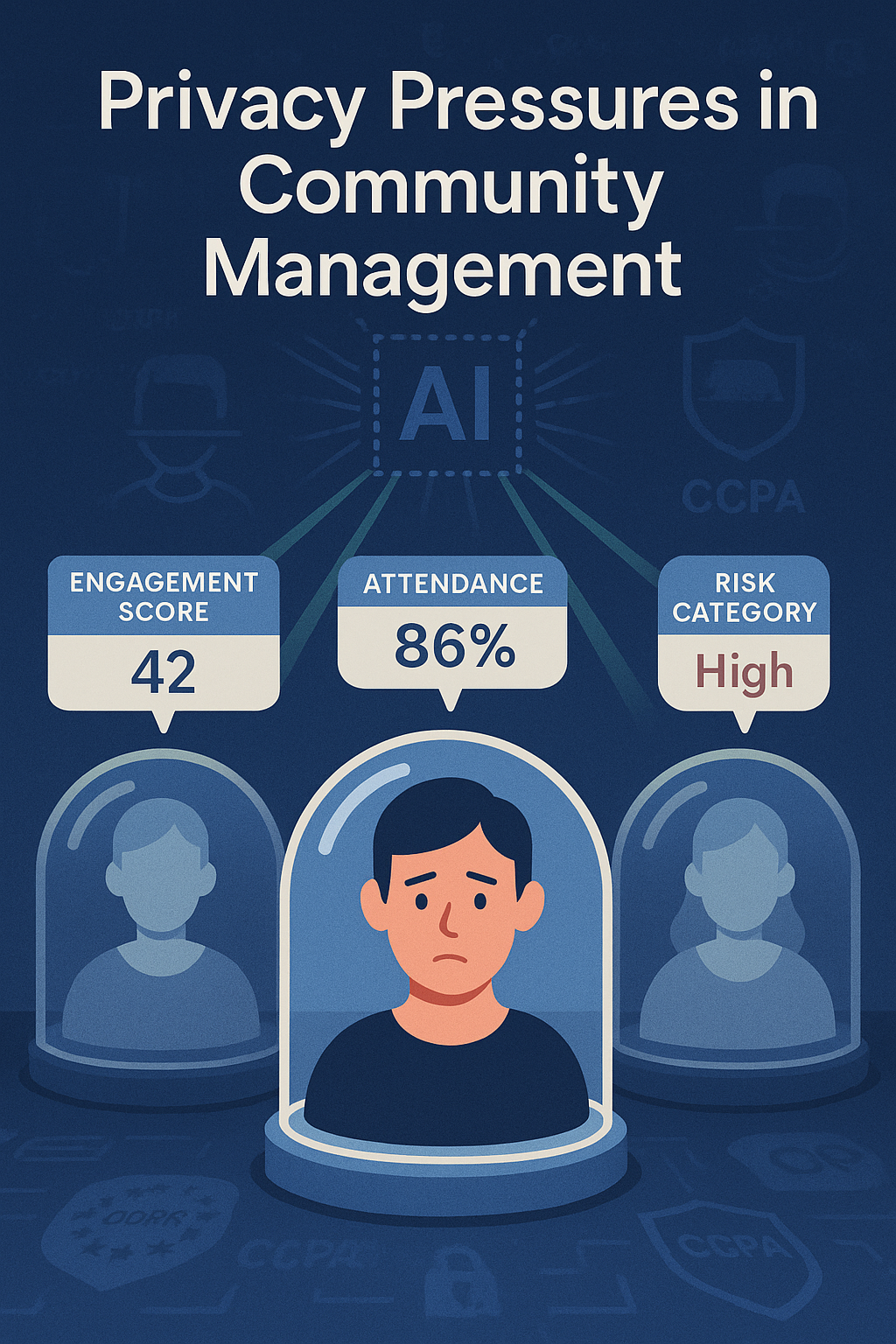

AI Community Management Pressures Privacy

The very act of “smarter community management” often means collecting more data: conversation history, behavior patterns, sentiment analysis, attendance records, even predicted churn risk.

Most users have no idea how their data is being used.

Meta is already facing legal threats over plans to use Instagram and Facebook user data in the EU to train its generative AI tools. If Big Tech is still getting this wrong, what are the odds your third-party AI vendor is fully compliant?

Privacy regulations like GDPR and CCPA are only growing stricter. That means your use of AI in community engagement needs built-in protections: clear disclosures, audit trails, and options for users to opt out of AI-based profiling.

Glue Up’s platform gives associations full control over what’s collected, why, and how it's used. But not every system does. Especially the cheap ones.

AI Community Management Reads the Dataset

Let’s start with what humans know instinctively.

A skilled community manager can walk into a virtual room, whether it’s a Slack channel, LinkedIn thread, or members-only forum, and feel the temperature instantly. They can tell when someone’s being cheeky versus combative. They understand cultural shorthand, catch subtle shifts in tone, and know the difference between critique and cruelty. That’s not just experience. That’s emotional literacy.

AI community management, on the other hand, doesn’t read the room. It reads the dataset.

And what’s in that dataset?

Historical bias. Out-of-context behavior. Flattened language. Often, training data pulled from public sources that don’t reflect your member base, your culture, or your values.

That disconnect shows up in ways that might seem small, until they’re not.

It Starts with Misread Tone.

Maybe someone posts a sarcastic comment during a heated conversation. A human moderator might roll their eyes and move on. An AI system trained on literal interpretation could flag that as “hostile behavior.”

Now imagine it’s a new member. A person from a different country or generation. They’re met not with a conversation, but a warning, or worse, a ban. What they learn is that this space doesn’t understand them. So, they stop speaking up.

And this happens more often than you'd think.

Researchers from the Berkman Klein Center at Harvard and the Oxford Internet Institute have found that automated moderation systems disproportionately misinterpret humor, cultural idioms, and non-Western communication styles as inappropriate. The result is over-moderation that amounts to cultural erasure.

Then Come the Silences.

It’s not always about who gets removed. Sometimes it’s about who self-censors. When members learn that certain topics trigger warnings, they avoid them altogether. Heated discussions, the kind that move ideas forward, get diluted into vanilla consensus.

What’s left are “safe” conversations that avoid depth, challenge, or vulnerability. And suddenly, your community is quiet for all the wrong reasons.

Legitimate Emotion Gets Punished.

AI community management often penalizes passion.

If someone posts about a personal experience, racism in the workplace, burnout as a nonprofit leader, or the challenges of being an immigrant entrepreneur, they’re likely doing it in a tone that’s raw. Urgent. Emotional.

But AI moderation tools trained to favor "neutral" or "professional" speech often interpret that intensity as aggression or hostility. So, the post is downranked. Or flagged. Or deleted entirely.

That’s more than a glitch. It’s a values failure.

You're telling your most engaged members, the ones willing to show up and be real, that there’s no place for their full selves in your space.

Nuance Is a Leadership Responsibility.

Nuance isn’t something you add later. It has to be baked into how your community operates. And if you’re relying on an AI tool that can’t recognize context, culture, or complexity, then your moderation strategy isn’t modern. It’s brittle.

Yes, AI can help scale community management. But scale without sensitivity isn’t a solution. It’s a liability.

Here’s what’s at stake:

Missed conversations that could’ve deepened connection

Lost members who feel policed rather than welcomed

A false sense of safety that hides how exclusionary the experience has become

The irony is that AI was supposed to increase inclusion by lowering the barrier to participation, personalizing engagement, and ensuring fairness.

But when it misinterprets the very things that make human conversation rich (emotion, disagreement, vulnerability), it does the opposite. It sanitizes. It dulls. It erases.

What Ethical AI Community Management Should Look Like

Let’s reframe the narrative.

If your organization is serious about community, then it needs to be serious about how AI is shaping that community. That means:

Training AI on culturally diverse, multilingual, and domain-specific data

Keeping a human-in-the-loop for edge cases and sensitive conversations

Allowing members to appeal moderation decisions, and explaining why something was flagged

Reframing community policies to explicitly recognize tone, context, and culture as essential dimensions of engagement

Most importantly, it means treating AI not as an infallible moderator but as a blunt instrument, one that needs sharpening through transparency, oversight, and reflection.

At Glue Up, we design our AI tools for associations, chambers, and professional groups that understand this balance. AI should help you listen better. It should free up your team to focus on relationships, not only reduce ticket volume.

AI doesn’t have to replace the room. It just needs to support the people in it.

People don’t leave communities because someone disagrees with them.

They leave when they feel misunderstood. Misjudged. Silenced.

And no keyword-targeted onboarding sequence or automated badge system can undo that.

AI community management has potential. But without human empathy, it becomes nothing more than a mechanical gatekeeper: efficient, scalable, and deeply out of touch.

And if you're building a member experience that claims to be for everyone, but only recognizes the voices in your dataset?

Then you're not managing a community. You're managing compliance.

And that’s not leadership. That’s avoidance.

When Everything Is Personalized, Nothing Feels Personal

The greatest strength of AI, its ability to tailor experiences at scale, is also its quietest threat.

AI community management systems are designed to optimize relevance. They surface posts you’re likely to engage with, recommend members who share your background, and nudge you toward content that fits your preferences. But relevance isn’t the same as connection. And personalization, unchecked, can feel more like isolation than intimacy.

Because when algorithms serve only what you’ve already liked, you stop growing. You stop seeing differences. And slowly, your community stops challenging you.

This is how echo chambers are built: by drift.

Personalization Becomes Prediction. And Prediction Becomes a Constraint.

Here’s how it plays out in member organizations:

Over-personalized event suggestions start directing members to the same types of webinars and meetups, based on past clicks.

Narrow networking prompts steer users toward those in similar industries or regions, reinforcing silos instead of cross-pollination.

“Safe” recommendations prioritize engagement rates over content diversity, meaning controversial, but important, topics rarely surface.

It’s math. And that’s the issue.

AI doesn’t aim for diversity. It aims for confidence. If the model believes you’ll likely click, it serves it again. If you didn’t click the first time, it probably won’t try twice. Your entire experience becomes a closed loop: tailored, frictionless, and increasingly predictable.

And in a social media feed, maybe that’s tolerable. But in an association? A chamber of commerce? A global network? That’s a missed opportunity. Worse, it’s a design flaw that undermines your entire purpose.
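To make that closed loop concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any vendor's recommender: the field names (`p_click`, `topic`), the sample events, and the "reserved slot" rule are all hypothetical. The first function ranks purely by predicted click probability. The second keeps the same ranking but holds back one slot for the best item outside the member's familiar topics.

```python
def rank_by_confidence(candidates, k):
    """Top-k items by predicted click probability: the closed loop."""
    return sorted(candidates, key=lambda c: c["p_click"], reverse=True)[:k]


def rank_with_reserved_slot(candidates, k, familiar_topics):
    """Same ranking, but the last slot goes to the highest-scoring item
    outside the member's familiar topics, if none made the cut already."""
    if k <= 0:
        return []
    ranked = sorted(candidates, key=lambda c: c["p_click"], reverse=True)
    top = ranked[:k]
    # If something unfamiliar already surfaced, leave the ranking alone.
    if any(c["topic"] not in familiar_topics for c in top):
        return top
    unfamiliar = [c for c in ranked if c["topic"] not in familiar_topics]
    if not unfamiliar:
        return top
    return top[:k - 1] + [unfamiliar[0]]


# Made-up events for a member whose click history is all finance.
candidates = [
    {"title": "Tax webinar", "topic": "finance", "p_click": 0.9},
    {"title": "Budgeting meetup", "topic": "finance", "p_click": 0.8},
    {"title": "Audit Q&A", "topic": "finance", "p_click": 0.7},
    {"title": "Sustainability roundtable", "topic": "sustainability", "p_click": 0.2},
]

closed_loop = [c["title"] for c in rank_by_confidence(candidates, 2)]
with_slot = [c["title"] for c in rank_with_reserved_slot(candidates, 2, {"finance"})]
print(closed_loop)
print(with_slot)
```

A reserved slot is only one possible design, and a crude one. The point is that someone has to decide the unfamiliar item deserves a place, because the confidence score alone never will.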

Communities Aren’t Meant to Be Mirrors. They’re Meant to Be Collisions.

The best communities are slightly uncomfortable.

They expose you to members you wouldn’t otherwise meet. Ideas you hadn’t considered. Business models you don’t yet understand. That’s where innovation, empathy, and professional growth live—at the edge of familiarity.

But AI community management tools, when left to run without human intention, erode that edge.

A chamber of commerce should introduce a young tech founder to a legacy manufacturer. An association should put a sustainability advocate in the same thread as a logistics executive. These aren’t random pairings. They’re engineered frictions. And they matter.

When you optimize for alignment too early, you miss the magic of dissonance.

Comfort Isn’t Connection. And Safety Isn’t Growth.

There’s a reason high-performing teams aren’t made of like-minded people. And the same is true for high-impact communities. Real engagement doesn’t come from being served what you expect. It comes from being stretched.

But AI, by default, avoids stretching. It leans into safety. And that’s fine for ad targeting, but it’s a problem for member-driven organizations that exist to broaden horizons, not narrow them.

This is why human oversight is a safety net and a strategic necessity.

Your team needs to actively curate what the algorithm cannot see:

Underserved voices

Emerging industries

Unpopular but necessary conversations

Cross-sector connections that algorithms would never recommend

Glue Up’s AI community management tools were built with that balance in mind. Our system adapts to behavior but still allows teams to prioritize intentional diversity. It’s smart tech with direction. Which matters, because personalization without purpose doesn’t serve your mission. It dilutes it.

The Future of Community Is Curiosity.

Yes, AI makes personalization easy. But leadership isn’t about ease. It’s about expansion.

If your goal is to increase engagement at any cost, personalization works. But if your goal is to build resilient, inclusive, and idea-rich communities, then the cost of overpersonalization is too high.

It’s tempting to mistake relevance for meaning. But meaning takes effort. And in communities, that effort is the point.

The question isn’t “What should we show this member next?”

The question is “What could change if they saw something new?”

That’s the shift from algorithm to intention. From AI that optimizes for what is, to leadership that imagines what could be.

And that’s the kind of growth no recommendation engine can automate.

Overreliance on AI Community Management Is Brittle.

Let’s talk about systems failure.

AI works great, until it doesn’t. You build your engagement funnel around automated onboarding, automated event reminders, automated polls, automated retention emails... and one change in your data model breaks everything.

Worse: the moment a real conflict or member complaint arises, there’s no human context to fall back on. No one remembers the conversation. No one saw the nuance. All you have is a transcript and a confidence score from a machine.

At Glue Up, we’ve worked with organizations that tried to scale community growth using “AI-first” platforms. It worked, until the platform made a moderation mistake that went viral. By the time humans got involved, the damage was already done.

Efficiency without resilience is risky.

What We’re Really Automating: Empathy

The hardest conversations are the most important ones.

When members give critical feedback, disagree publicly, or express emotion, those are the moments that make or break a community. And those are the very interactions most AI systems are trained to avoid, downrank, or delete.

That’s a moderation issue and a leadership one.

When you outsource empathy, you outsource identity. And for member organizations, that’s dangerous. Your members don’t want a frictionless experience. They want to feel heard, seen, understood, and safe enough to speak freely.

That takes people.

Mental Health Is a User and a Systems Design Issue

We talk a lot about engagement. Clicks. Sessions. Comments per post. But what’s less discussed, and arguably more important, is what those interactions feel like.

AI community management does more than filter out toxicity or optimize what members see. It subtly reshapes the emotional landscape of the entire community. And the more AI governs the experience, the more human nuance falls through the cracks.

On the surface, everything seems fine. Participation looks high. Notifications are being opened. Forums are active. But underneath that is something harder to measure: fatigue, isolation, and quiet disconnection.

AI-Driven Systems Change How We Relate to the Platform and to Each Other

Recent research from the American Psychological Association revealed something troubling: employees who interact regularly with AI systems report higher rates of loneliness, insomnia, and substance use. The culprit isn’t overt harm. It’s emotional displacement. AI replaces small but meaningful moments of human connection with cold, transactional exchanges.

In the context of community platforms, this manifests in ways we’ve normalized:

Gamified badge systems that trigger dopamine but not belonging

Push notifications that simulate urgency but don’t build trust

Engagement prompts that reward activity but don’t reflect intention

Filtered threads where vulnerable or off-topic comments quietly disappear

Members start to ask themselves: Is anyone really listening? Or is this all just signals in a feed?

The result is participation without presence. Noise without connection.

Designing for Metrics Isn’t the Same as Designing for Meaning

There’s a reason so many online communities feel hollow, even when they look active. We’ve optimized them for retention, not resonance.

AI community management often focuses on behavioral triggers, what keeps people clicking, what makes them return. But that lens treats humans like variables. It mistakes attention for attachment. And it reduces emotional complexity into a series of “engagement events” rather than actual relationships.

But people don’t join chambers of commerce, associations, or professional networks just to complete tasks. They join to belong. They join for moments of solidarity, recognition, mentorship, and insight.

No badge can replicate that. No algorithm can guarantee it.

The Silence After the Automation Is Telling

One of the most dangerous consequences of AI-driven community systems is quiet erosion: the slow, unnoticed drop-off of meaningful voices.

Not the loudest. The ones who used to check in often. Ask good questions. Support others. Over time, they stop logging in. Not because they were ignored, but because they were over-processed. Their experiences got turned into data points, their stories segmented into “sentiment,” and their feedback filtered as “not actionable.”

It’s the kind of churn that doesn’t show up in dashboards. But it shows up in culture. And once your most thoughtful contributors disappear, the soul of your community follows.

We Must Ask Better Design Questions

This is an argument against thoughtless design.

If your AI nudges are increasing usage but harming well-being, are you actually succeeding? If your platform rewards surface-level interaction but discourages vulnerability, what are you really building?

At Glue Up, we believe in ethical AI that supports human connection. That means:

Giving members control over their experience

Reducing algorithmic noise and allowing space for organic conversation

Designing for rest and reflection

Creating space where presence matters more than performance

You can scale a platform. But you cannot scale trust. You must earn it, again and again, with systems that respect emotional realities, not just behavioral metrics.

The Future of Community Leadership Requires Emotional and Machine Intelligence

If your AI-driven community system makes people feel more connected, more understood, more grounded, that’s real success. If it makes them feel exhausted, pressured, or unseen, then no KPI can justify it.

Community health is human health. And AI community management must be accountable for both.

Because at the end of the day, what keeps people in a community isn’t a notification. It’s a sense of being known.

And that, no algorithm can fake.

So, What Does Responsible AI Community Management Look Like?

We’ve seen the risks. We’ve acknowledged the failures. Now the question is: what comes next?

Because AI community management isn’t going away. The tools are here. The systems are in place. The question is no longer whether we use AI; it’s how we use it without compromising the very things communities are built on: trust, connection, and care.

At Glue Up, we don’t see ethics as a compliance box to tick. We see it as a design principle. A leadership stance. A line in the sand. Here's what that looks like in practice:

Transparency Is Foundational

Members deserve to know when AI is shaping their experience.

Is a machine deciding which of their posts are visible?

Is their profile being scored, segmented, or tagged?

What happens to their data after they log out?

Transparency is good UX and a form of respect for the community. When members understand how AI moderation works, they’re more likely to trust the platform, engage meaningfully, and raise concerns early. That’s insight.

Human-In-The-Loop Is Non-negotiable

Automation should accelerate productivity. But in AI community management, irreversible decisions, like suspensions, bans, or hidden content, must include a human checkpoint, because community decisions carry emotional weight.

The difference between a warning and a welcome-back message can decide whether someone stays or leaves. You don’t outsource that to an algorithm.
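One way to picture that checkpoint is a small routing rule. The Python below is a rough sketch under assumptions, not a reference to any platform's API: the action names, the `Proposal` fields, and the `ModerationRouter` class are all hypothetical. Whatever the model proposes, anything on the irreversible list waits in a queue for a person, no matter how confident the model claims to be.

```python
from dataclasses import dataclass, field

# Hypothetical action names; adjust to your platform's vocabulary.
IRREVERSIBLE_ACTIONS = {"suspend", "ban", "hide_content"}


@dataclass
class Proposal:
    member_id: str
    action: str
    reason: str        # explanation for the reviewer and, later, the member
    confidence: float  # the model's self-reported score


@dataclass
class ModerationRouter:
    review_queue: list = field(default_factory=list)
    applied: list = field(default_factory=list)

    def route(self, proposal: Proposal) -> str:
        """Queue irreversible actions for a person; apply everything else."""
        # Confidence is deliberately ignored here: a high score does not
        # earn the model the right to skip review.
        if proposal.action in IRREVERSIBLE_ACTIONS:
            self.review_queue.append(proposal)
            return "needs_human_review"
        self.applied.append(proposal)
        return "auto_applied"


router = ModerationRouter()
ban_outcome = router.route(Proposal("m-102", "ban", "flagged as hostile", 0.97))
tag_outcome = router.route(Proposal("m-311", "add_topic_tag", "mentions events", 0.88))
print(ban_outcome, tag_outcome, len(router.review_queue))
```

Keeping the `reason` on every proposal matters as much as the queue itself: it is what lets a reviewer, and eventually the member, see why something was flagged.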

Context-Aware AI Starts with Diverse, Intentional Data

AI can’t guess what fairness looks like. It has to be taught. That means curating datasets that reflect the real diversity of your member base: culturally, behaviorally, and experientially.

It also means running regular audits. Looking at edge cases. Asking who gets flagged the most, and why. Without this, you're not managing a community. You’re reinforcing a pattern.
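As a starting point for "who gets flagged the most," a basic audit can compare flag rates across member segments. The Python below is a minimal sketch with made-up data. The group labels, the log format, and the helper names are assumptions for illustration, and a real audit would need segments members have consented to share, plus far larger samples.

```python
from collections import defaultdict


def flag_rates(decisions):
    """Share of posts flagged per group.

    `decisions` is an iterable of (group, was_flagged) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in decisions:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Highest flag rate divided by the lowest; 1.0 means equal rates."""
    lowest = min(rates.values())
    highest = max(rates.values())
    if lowest == 0:
        return float("inf") if highest > 0 else 1.0
    return highest / lowest


# Illustrative, made-up moderation log.
sample_log = [
    ("group_a", True), ("group_a", False), ("group_a", False), ("group_a", False),
    ("group_b", True), ("group_b", True), ("group_b", False), ("group_b", False),
]

rates = flag_rates(sample_log)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A ratio well above 1.0 is a prompt to read the flagged posts and ask why, not a verdict on its own. The number tells you where to look; people still have to do the looking.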

At Glue Up, our AI systems are designed with bias checks and feedback loops so that what we build reflects actual people.

Data Dignity Is the New Trust Currency

You don’t need to know everything about a member to serve them well. You just need to collect the right things, at the right time, for the right reasons.

Responsible AI community management includes:

Minimal data capture: Avoiding surveillance culture

Secure storage: Especially for personally identifiable and behavioral data

Clear disclosures: Not buried in a terms-of-service PDF, but embedded in the user flow

When your data practices are respectful, your relationships deepen. Period.
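Minimal data capture can be as simple as an allowlist applied before anything is stored. The Python below is a sketch under assumptions: the field names and purposes are hypothetical, and a real system would pair this with consent records and retention rules. Each allowed field carries the purpose disclosed to members, and anything not on the list is dropped.

```python
# Hypothetical allowlist: each field is paired with the purpose disclosed to members.
ALLOWED_FIELDS = {
    "member_id": "account identification",
    "event_rsvp": "event logistics",
    "topic_interests": "content recommendations the member opted into",
}


def minimize(raw_event: dict) -> dict:
    """Keep only allowlisted fields; everything else is dropped before storage."""
    return {key: value for key, value in raw_event.items() if key in ALLOWED_FIELDS}


# Made-up incoming event that includes signals nobody asked permission for.
raw = {
    "member_id": "m-102",
    "event_rsvp": "annual-summit",
    "typing_cadence_ms": 143,
    "predicted_churn_risk": 0.71,
}

stored = minimize(raw)
print(stored)
```

An allowlist flips the default. Instead of deciding what to throw away, the team has to justify each thing it keeps.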

Recognize the Humans Behind the Machine

AI doesn't run itself. It relies on people: data labelers, community managers, UX writers, policy thinkers. Often, their contributions are invisible. Worse, in some parts of the industry, they’re undervalued and underpaid.

Responsible AI means recognizing the ghost work that powers your platform. It means supporting community teams with real tools, clear escalation pathways, and the authority to override automation when necessary.

Because the soul of a community doesn’t live in its codebase. It lives in its stewards.

AI Community Management Can Be Ethical. Humane. Useful. And Yes—Smart.

The conversation around AI is often framed in absolutes: disruption or dystopia, innovation or harm. But there’s a middle path, and it’s paved with intention.

We don’t need to abandon automation. We need to humanize it.

At Glue Up, our commitment is to help associations, chambers, and member organizations harness AI without losing what makes their communities thrive: empathy, dialogue, and shared purpose.

We build tools that moderate and elevate. That personalize and contextualize. That protect and preserve the human fabric of connection.

Because community is a responsibility.

And that responsibility doesn’t disappear just because the system now runs on code.

The Future of Community Is Still Human

AI doesn’t build relationships. It doesn’t earn trust. It doesn’t foster belonging.

That work still belongs to people.

If you're a membership director, executive leader, or association strategist, your responsibility is to preserve and protect the very conditions that make community possible. Conflict. Curiosity. Context. Accountability. These are the heart of the community itself.

AI community management can be helpful. It can be powerful. But it must always remain a tool in the service of people.

At Glue Up, we understand this distinction. It’s why our platform uses AI to enhance your work. To reduce noise. To surface insight.

Because true engagement isn’t something you scale with code. It’s something you sustain with care.

So, if you’re serious about growing a stronger, smarter, more human-centered organization, without compromising your values, we’re ready.

Book a demo with Glue Up. Let’s build communities that last: on your terms, with your leadership, and with technology that respects the people at the center.